Common Misconceptions About AI Model Fine-Tuning Debunked

Understanding AI Model Fine-Tuning

Artificial Intelligence (AI) now powers applications across many industries. A crucial part of AI development is model fine-tuning, a process that adapts a pre-trained model to a specific task. The process is widely misunderstood, though, and those misunderstandings blur both what fine-tuning is for and how it works. In this post, we'll debunk four of the most common myths.

Misconception 1: Fine-Tuning is the Same as Training from Scratch

One prevalent misconception is that fine-tuning is synonymous with training a model from scratch. Training from scratch starts from randomly initialized parameters and has to learn everything from the data. Fine-tuning starts from a pre-trained model's parameters and adjusts them to better suit a particular task. Because the model already carries knowledge from its initial training, fine-tuning typically needs far less data, time, and compute than building from the ground up.
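To make the difference concrete, here is a minimal sketch using a toy one-variable linear model and plain gradient descent. Everything in it is an assumption for illustration: the "pre-trained" weights, the target task, the learning rate, and the step count are made-up values, and a real fine-tuning job would use a deep learning framework rather than hand-written updates.

```python
def train(weights, data, lr=0.1, steps=20):
    """Run plain gradient descent on mean squared error for y = w*x + b."""
    w, b = weights
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def mse(weights, data):
    """Mean squared error of the model on the given (x, y) pairs."""
    w, b = weights
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


# Hypothetical target task: y = 2.1x + 0.9
task_data = [(x / 10, 2.1 * (x / 10) + 0.9) for x in range(10)]

pretrained = (2.0, 1.0)  # assumed weights from a related task (y = 2x + 1)
scratch = (0.0, 0.0)     # untrained starting point

# Same data, same optimizer, same number of steps; only the start differs.
fine_tuned = train(pretrained, task_data)
from_scratch = train(scratch, task_data)

loss_fine_tuned = mse(fine_tuned, task_data)
loss_from_scratch = mse(from_scratch, task_data)
print(f"fine-tuned loss:   {loss_fine_tuned:.5f}")
print(f"from-scratch loss: {loss_from_scratch:.5f}")
```

In this toy setup, the run that starts from the pre-trained weights ends with a much lower loss after the same 20 steps, because it begins close to the solution. The run from scratch would need many more steps to catch up.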

Misconception 2: Fine-Tuning Doesn't Require Expertise

Another myth is that fine-tuning can be performed without substantial AI expertise. User-friendly tools exist, but choosing hyperparameters such as the learning rate and number of epochs, preprocessing data, and picking appropriate evaluation metrics all require a strong grasp of AI concepts. Expertise matters most in spotting overfitting (the model memorizes the fine-tuning data and generalizes poorly) and underfitting (the model fails to learn the task), either of which compromises model performance.
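One common guard against overfitting is early stopping: track the loss on a held-out validation set and keep the checkpoint from the epoch where it was lowest. The sketch below is a simplified illustration; the function name, the `patience` value, and the loss numbers are hypothetical, and most training frameworks ship their own version of this logic.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return the index of the epoch whose checkpoint should be kept.

    Stops scanning once the validation loss has failed to improve
    for `patience` consecutive epochs.
    """
    best_epoch, best_loss = 0, float("inf")
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss = epoch, loss
        elif epoch - best_epoch >= patience:
            break  # no improvement for `patience` epochs
    return best_epoch


# Hypothetical validation losses: they fall, then rise as overfitting sets in.
val_losses = [0.90, 0.62, 0.48, 0.45, 0.47, 0.52, 0.60]
best = early_stopping_epoch(val_losses)
print(f"keep the checkpoint from epoch {best}")
```

Here the function returns epoch 3, the point after which the validation loss starts climbing even though training would continue to lower the training loss.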

Misconception 3: All Models Can Be Fine-Tuned Equally

A common belief is that all pre-trained models can be fine-tuned with the same ease and efficiency. The truth is that models vary significantly in their architecture and scope, which affects how readily they adapt to new tasks. Some models are designed with flexibility in mind, while others require more substantial modifications, such as replacing the output layer to match the new task. Evaluate a model's compatibility with the intended application before embarking on fine-tuning.

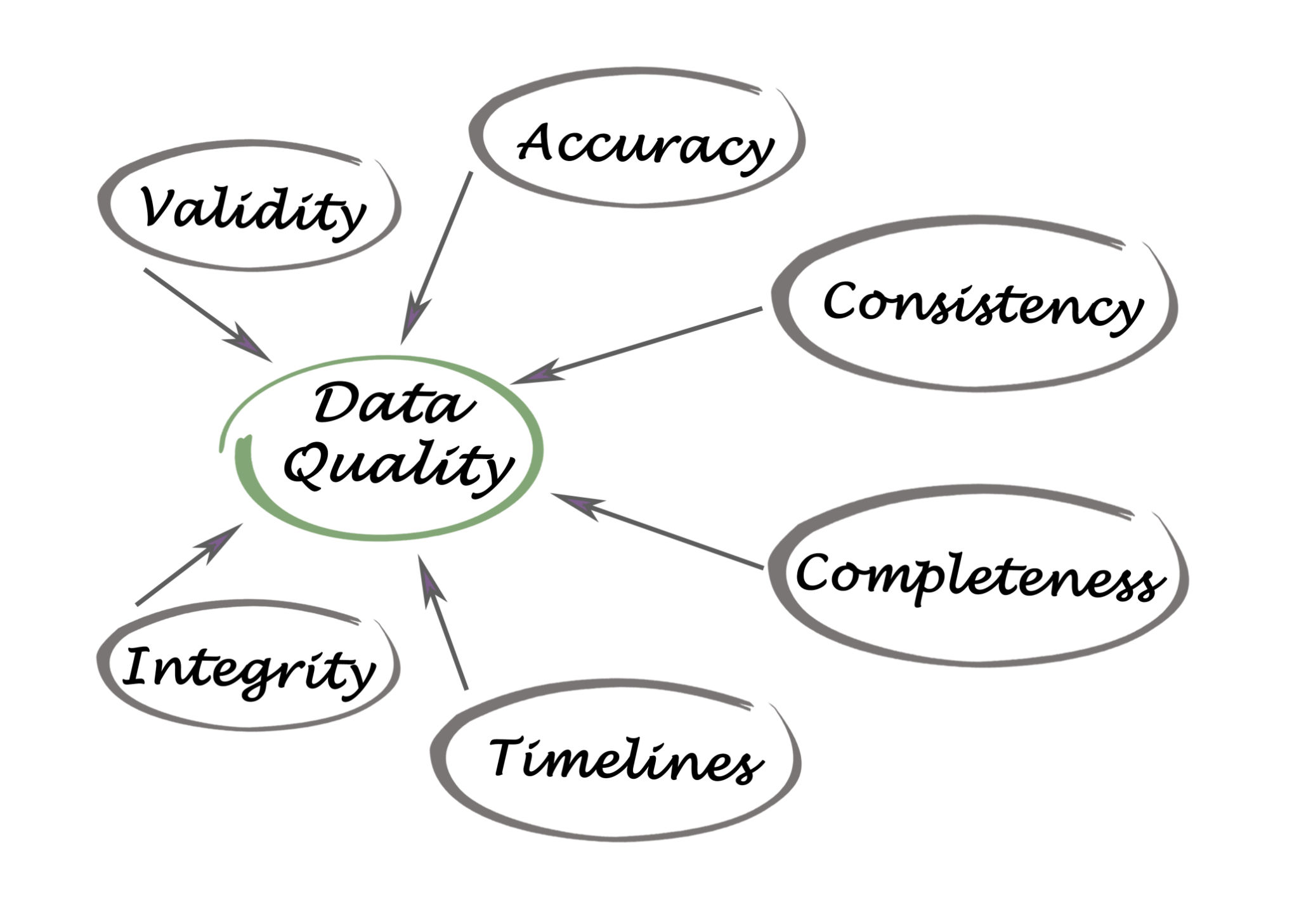

Misconception 4: Fine-Tuning Ignores Data Quality

There's a misconception that the quality of data used in fine-tuning is irrelevant since the model is already pre-trained. In fact, data quality is crucial, and because fine-tuning datasets are usually small, each bad example carries more weight. High-quality datasets that are representative of the task at hand help the model learn relevant patterns and nuances. Poor-quality or biased data can lead to inaccurate predictions and reduced model efficacy.
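A few basic checks catch many data problems before fine-tuning starts. The sketch below assumes a hypothetical text-classification dataset of `(text, label)` pairs; the `clean_examples` helper and the sample rows are made up for illustration, and real pipelines usually need task-specific checks on top of these.

```python
from collections import Counter


def clean_examples(examples, allowed_labels):
    """Drop empty, mislabeled, and duplicate (text, label) pairs."""
    seen = set()
    cleaned = []
    for text, label in examples:
        # Normalize whitespace and case only for duplicate detection.
        key = " ".join(text.split()).lower()
        if not key or label not in allowed_labels:
            continue
        if key in seen:
            continue
        seen.add(key)
        cleaned.append((text.strip(), label))
    return cleaned


raw = [
    ("Great product", "positive"),
    ("great   product", "positive"),  # duplicate after normalization
    ("", "negative"),                 # empty text
    ("Arrived broken", "negative"),
    ("It is fine", "meh"),            # label outside the allowed set
]

cleaned = clean_examples(raw, {"positive", "negative"})
label_counts = Counter(label for _, label in cleaned)
print(cleaned)
print(label_counts)  # a heavily skewed count would hint at bias in the data
```

Checking the label counts after cleaning is a cheap way to notice when one class dominates the dataset.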

The Benefits of Model Fine-Tuning

Fine-tuning offers several advantages that make it a popular approach in AI development:

- Efficiency: By building on existing models, fine-tuning reduces the time and computational resources needed.

- Customization: It allows models to be tailored to specific tasks or applications, enhancing their relevance and performance.

- Cost-Effectiveness: Fine-tuning can be more economical than training new models from scratch, especially for complex tasks.
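The efficiency gain can be estimated with simple arithmetic. One common approach, assumed here for illustration, is to freeze most of the pre-trained layers and update only the final one. The layer sizes below are hypothetical and describe a small fully connected network, not any particular model.

```python
def dense_params(n_in, n_out):
    """Parameter count of a fully connected layer: weights plus biases."""
    return n_in * n_out + n_out


# Hypothetical layer widths: input 512, two hidden layers, 10 output classes.
layer_sizes = [512, 256, 128, 10]
params_per_layer = [
    dense_params(n_in, n_out)
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
]

total = sum(params_per_layer)
trainable_when_frozen = params_per_layer[-1]  # only the last layer is updated

print(f"training from scratch updates {total} parameters")
print(f"fine-tuning the last layer updates {trainable_when_frozen} parameters")
print(f"fraction updated: {trainable_when_frozen / total:.2%}")
```

In this example, only 1,290 of 165,514 parameters (about 0.8%) are updated, which is where much of the saving in time and compute comes from when layers are frozen.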

Conclusion: Embracing Fine-Tuning with Clarity

Fine-tuning is not training from scratch, it does not run itself, it does not work equally well on every model, and it depends heavily on data quality. Keeping these points in mind leads to better decisions about when and how to fine-tune, and to more robust and versatile AI applications across industries.